FineWeb, a newly released open-source dataset, aims to advance language model research with a large collection of English web data. Developed by a consortium led by Hugging Face, FineWeb offers over 15 trillion tokens sourced from CommonCrawl dumps spanning the years 2013 to 2024.

FineWeb is built with a processing pipeline implemented in the datatrove library. The pipeline cleans and deduplicates the raw web data, improving its quality and suitability for language model training and evaluation.

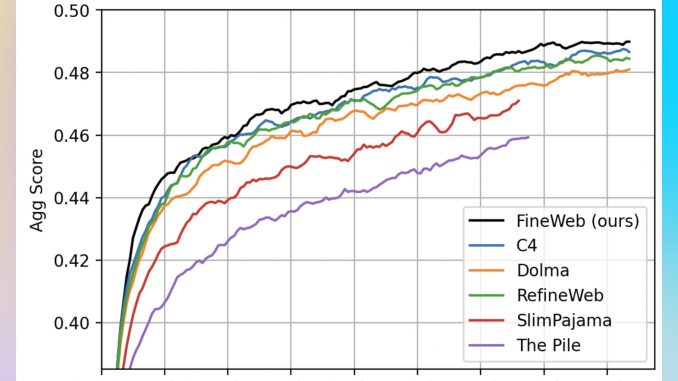

One of FineWeb’s key strengths is the performance of models trained on it. According to its developers, careful curation and filtering allow models trained on FineWeb to outperform models trained on established datasets such as C4, Dolma v1.6, The Pile, and SlimPajama across a range of benchmark tasks, which points to its potential as a resource for natural language understanding research.

Transparency and reproducibility are central to FineWeb’s development. The dataset is released under the ODC-By 1.0 license, together with the code for its processing pipeline, so researchers can replicate the work and build on it. The developers also ran extensive ablations and benchmarks to compare FineWeb against established datasets.

FineWeb’s journey from conception to release has been marked by meticulous craftsmanship and rigorous testing. Filtering steps such as URL filtering, language detection, and quality assessment contribute to the dataset’s integrity and richness. Each CommonCrawl dump is deduplicated individually using advanced MinHash techniques, further enhancing the dataset’s quality and utility.
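To make the per-dump deduplication step more concrete, here is a minimal, illustrative sketch of MinHash-based near-duplicate removal in plain Python. It is not the datatrove implementation: the function names, the 5-word shingles, the 64 hash functions, and the 0.7 similarity threshold are all assumptions chosen for readability, and the brute-force pairwise comparison stands in for the indexing a large-scale pipeline would need.

```python
import hashlib
import re

NUM_HASHES = 64    # assumed signature length, not FineWeb's actual setting
SHINGLE_SIZE = 5   # assumed word n-gram size, not FineWeb's actual setting


def shingles(text, n=SHINGLE_SIZE):
    """Split text into a set of overlapping word n-grams."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def minhash_signature(text, num_hashes=NUM_HASHES):
    """For each seeded hash function, keep the minimum hash over all shingles."""
    shingle_set = shingles(text)
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(8, "little")  # a different salt gives a different hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "little",
            )
            for s in shingle_set
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """The fraction of matching signature slots estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(docs, threshold=0.7):
    """Keep a document only if it is not too similar to any document already kept."""
    kept = []  # list of (document, signature) pairs
    for doc in docs:
        sig = minhash_signature(doc)
        if all(estimated_jaccard(sig, kept_sig) < threshold for _, kept_sig in kept):
            kept.append((doc, sig))
    return [doc for doc, _ in kept]


def deduplicate_per_dump(dumps, threshold=0.7):
    """Deduplicate each dump on its own, so duplicates across dumps are not removed."""
    return {name: deduplicate(docs, threshold) for name, docs in dumps.items()}
```

Because each dump is handled independently in this sketch, a page that appears in two different crawls survives in both, while repeated copies within a single crawl are collapsed to one.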

As researchers continue to explore the possibilities offered by FineWeb, it promises to serve as a valuable resource for advancing natural language processing. With its vast collection of curated data and commitment to openness and collaboration, FineWeb holds the potential to drive groundbreaking research and innovation in the field of language models.

In conclusion, FineWeb represents a significant step in the quest for better language understanding. While not without its challenges, it offers a promising foundation for future research and development in natural language processing.

Niharika is a technical consulting intern at Marktechpost. She is a third-year undergraduate pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She has a keen interest in machine learning, data science, and AI, and is an avid reader of the latest developments in these fields.
