Assessing a Large Language Model's (LLM) proficiency in a given capability requires a systematic, multifaceted evaluation approach. Such an approach is needed to pinpoint the model's limitations and the areas where it can be improved. As LLMs grow more complex and become able to perform a wider range of tasks, evaluating them becomes increasingly difficult.

Conventional generation benchmarks frequently rely on general assessment criteria, such as helpfulness and harmlessness, which are imprecise and shallow compared to human judgment. These benchmarks also tend to focus on particular tasks, such as instruction following, which leads to an incomplete and skewed picture of a model's overall performance.

To address these issues, a team of researchers has developed BIGGEN BENCH, a thorough and principled generation benchmark. Spanning 77 different tasks, it is designed to measure nine distinct language model capabilities, giving a more comprehensive and accurate evaluation. The nine capabilities that BIGGEN BENCH evaluates are as follows.

Instruction Following

Grounding

Planning

Reasoning

Refinement

Safety

Theory of Mind

Tool Usage

Multilingualism

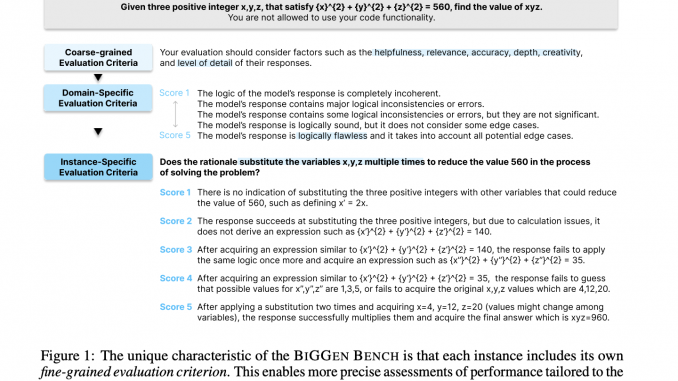

A key component of BIGGEN BENCH is its use of instance-specific evaluation criteria. This closely mirrors the context-sensitive, nuanced judgments humans make intuitively. Instead of assigning a generic helpfulness score, the benchmark can assess how well a language model explains a particular mathematical concept or how well it accounts for cultural nuances in a translation task.

By using these specific criteria, BIGGEN BENCH can identify subtle differences in LM performance that more general benchmarks might miss. This fine-grained approach is crucial for understanding the strengths and weaknesses of different models more accurately.
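To make the idea of an instance-specific criterion more concrete, here is a minimal sketch of how such a rubric could be represented and turned into a grading request for an evaluator LM. The class names (`Rubric`, `Instance`), the helpers `build_judge_prompt` and `parse_score`, the 1-to-5 scale, the prompt layout, and the translation example are all hypothetical illustrations, not the benchmark's actual data format or API.

```python
import re
from dataclasses import dataclass


@dataclass
class Rubric:
    # Hypothetical structure: one instance-specific criterion plus a
    # description for each score level (assumed here to be 1 through 5).
    criterion: str
    score_descriptions: dict[int, str]


@dataclass
class Instance:
    # Hypothetical benchmark instance: capability label, task prompt,
    # and a rubric written specifically for this one prompt.
    capability: str
    prompt: str
    rubric: Rubric


def build_judge_prompt(instance: Instance, response: str) -> str:
    """Assemble the text an evaluator LM would grade (layout is an assumption)."""
    levels = "\n".join(
        f"Score {level}: {text}"
        for level, text in sorted(instance.rubric.score_descriptions.items())
    )
    return (
        f"Task:\n{instance.prompt}\n\n"
        f"Response to evaluate:\n{response}\n\n"
        f"Criterion: {instance.rubric.criterion}\n{levels}\n\n"
        "Write brief feedback, then end with an integer score from 1 to 5."
    )


def parse_score(judge_output: str) -> int:
    """Return the last standalone digit 1-5 in the evaluator's output."""
    matches = re.findall(r"\b([1-5])\b", judge_output)
    if not matches:
        raise ValueError("no score found in evaluator output")
    return int(matches[-1])


# Hypothetical instance: the criterion targets this prompt specifically,
# instead of a generic "helpfulness" score.
example = Instance(
    capability="multilingualism",
    prompt="Translate this Korean New Year greeting into natural English for a US reader.",
    rubric=Rubric(
        criterion="Does the translation adapt culture-specific expressions "
                  "rather than rendering them word for word?",
        score_descriptions={
            1: "Literal translation; cultural references are lost or confusing.",
            2: "Mostly literal; only a few expressions are adapted.",
            3: "Meaning is preserved, but several expressions sound unnatural.",
            4: "Natural overall, with minor awkwardness in one expression.",
            5: "Natural English that conveys the intent of every cultural reference.",
        },
    ),
)
```

For instance, `build_judge_prompt(example, "Happy New Year! Wishing you lots of luck.")` produces the text an evaluator would see, and `parse_score("Feedback: adapts most idioms well. Score: 4")` returns `4`. Keeping the rubric attached to each instance, rather than in one global template, is what lets the criterion differ from prompt to prompt.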

BIGGEN BENCH has been used to evaluate 103 frontier LMs ranging from 1 billion to 141 billion parameters, including 14 proprietary models. Five separate evaluator LMs are used in the assessment, which makes the evaluation process more thorough and reliable.
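The article does not say how the judgments of the five evaluator LMs are combined or reported. As one simple possibility, offered only as an assumption, the sketch below averages each instance's scores across evaluators and then averages those per-instance means within each capability. The record layout and the function name `capability_scores` are hypothetical.

```python
from collections import defaultdict
from collections.abc import Iterable
from statistics import mean


def capability_scores(
    records: Iterable[tuple[str, int, str, float]],
) -> dict[str, float]:
    """Aggregate (capability, instance_id, evaluator, score) records.

    Assumed scheme: first average over evaluators for each instance,
    then average those per-instance means within each capability.
    """
    per_instance: dict[tuple[str, int], list[float]] = defaultdict(list)
    for capability, instance_id, _evaluator, score in records:
        per_instance[(capability, instance_id)].append(score)

    per_capability: dict[str, list[float]] = defaultdict(list)
    for (capability, _instance_id), scores in per_instance.items():
        per_capability[capability].append(mean(scores))

    return {cap: mean(values) for cap, values in per_capability.items()}
```

Averaging per instance first keeps an instance that happened to receive more evaluator judgments from carrying extra weight in its capability's score. Reporting each evaluator's scores separately is an equally reasonable alternative.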

The team has summarized their primary contributions as follows.

The construction and evaluation process of BIGGEN BENCH is described in depth, with an emphasis on the human-in-the-loop process used to create each instance.

The team reports evaluation results for 103 language models, showing that fine-grained assessment reveals consistent performance gains as model size scales. The results also show that while instruction-following capabilities improve substantially, gaps in reasoning and tool usage persist between different types of LMs.

The reliability of these assessments was examined by comparing evaluator LM scores with human evaluations, and statistically significant correlations were found for all capabilities. The team also explored different approaches to improving open-source evaluator LMs so that they match GPT-4's performance, supporting impartial and accessible evaluations.
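Checking agreement between an evaluator LM and human graders comes down to correlating two lists of scores for the same responses. The article does not name the correlation measure, so the sketch below assumes Pearson's r. It also computes the usual t statistic, t = r·sqrt((n − 2) / (1 − r²)), which is compared against Student's t distribution with n − 2 degrees of freedom to judge significance. The function names are hypothetical; in practice `scipy.stats.pearsonr` returns both the coefficient and a p-value directly.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between evaluator-LM scores and human scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / (spread_x * spread_y)


def t_statistic(r: float, n: int) -> float:
    """t = r * sqrt((n - 2) / (1 - r^2)), with n - 2 degrees of freedom."""
    if n < 3:
        raise ValueError("need at least 3 paired scores")
    if abs(r) >= 1:
        # Perfect (or float-rounded past perfect) correlation: t is unbounded.
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1 - r * r))
```

For example, `pearson_r([4, 3, 5, 2], [4, 2, 5, 3])` would compare four made-up evaluator scores with four made-up human scores for the same responses. A larger |t| for a given n corresponds to a smaller p-value.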

Check out the Paper, Dataset, and Evaluation Results. All credit for this research goes to the researchers of this project.


Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a data science enthusiast with good analytical and critical-thinking skills and a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.
